Using PL/pgSQL to Calculate New Postgres Columns

Steve Pousty

8 min readMore by this author

In our last blog post on using Postgres for statistics, I covered some of the decisions on how to handle calculated columns in PostgreSQL. I chose to go with adding extra columns to the same table and inserting the calculated values into these new columns. Today’s post is going to cover how to implement this solution using PL/pgSQL.

Why PL/pgSQL?

I bet your first question is going to be: Why did you choose to do this in PL/pgSQL rather than just some quick editor work?

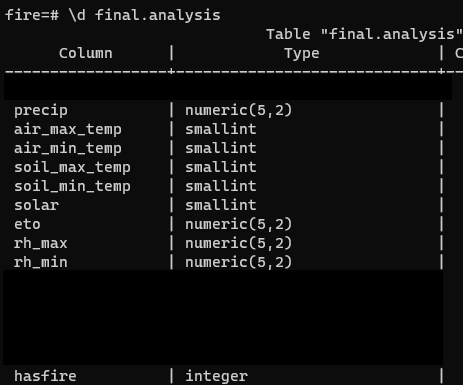

I actually started with handcrafting all the data definition language (DDL) and SQL statements and quickly changed my mind. Just to remind you, here are all the explanatory columns we have in our fire dataset:

Hasfire is the variable we are trying to predict (the occurrence of a fire) and all the other columns are the potential explanatory variables.

Reasons to Replace Copy and Paste with Code

I tried to use code to make the new calculated columns because:

- Not only would I have to make 9 new table columns but also 9 different

INSERTformula columns. All that is a great opportunity to put a typo in a column name or a formula. It is also a lot of copy and pasting. - Calculating Z-scores is a standard step in many data science workflows. By writing a PL/pgSQL function, I could simply repeat calculating columns in any new analysis by using the function. It would also be available to other data scientists to use as well.

- I need to learn more PL/pgSQL and this seemed like an interesting problem. The function was going to have to run some DDL and some SQL.

Design Considerations

As mentioned above, I wanted the function to be generic so I could re-use it in other places. I also decided that it would be best to retain the original column name in the calculated column name. Finally, I wanted to handle all the columns at once, not a function for a single column at a time.

Implementation

With these constraints in mind, I came up with a function that accomplishes all 3 goals. To see the entire function, it's on github, but for the blog post I am going to break it down into sections.

PostgreSQL Function Signature

Let’s start with the function signature:

CREATE OR REPLACE FUNCTION final.initial_center_standardize(schema_name TEXT, table_name TEXT, prefix TEXT, column_names TEXT\[\], pkey TEXT) RETURNS TEXT as

The beginning of the statement is just normal SQL to create a new function. Notice I am actually creating this function inside the schema name “final”. The first two parameters get the schema and table name for the table where we are going to add the columns.

The next parameter is going to be the prefix for the new calculated column name. The next parameter is an array of text values (which is a data type in PostgreSQL). The array contains the name of all the columns for which we want Z-scores. Finally, we need the name of the primary key column for one of the queries used in the function.

I just return a text field because none of this work will return any data values, but I like to show the caller if the call worked. I usually return “done” or “finished” for success and “error” with some text explaining what went wrong.

Just to be clear, for those new to PL/pgSQL programming, I did not come up with this signature at the beginning. Some of the needed parameters only became apparent as I built the rest of the function.

Loop with Iterators in PL/pgSQL

With the signature done, we first declare some variables we will need later in the function. PL/pgSQL requires you to pre-declare any variables you use in a block.

The next step is to create a foreach loop to give us access to each individual element in the array of column names we passed in.

FOREACH col IN ARRAY column_names

LOOP

Like a normal looping and iterator pattern, this code will extract the first element in the array and give that element the name ‘col’ in the body of the loop. It will continue to iterate through array elements until there are no more elements in the array. This is perfect for our work, each column is going to get the same treatment.

Creating the New Columns

Next our code takes on the task of creating the new column to hold the Z-score. First we create the new column name and storing it in a variable:

new_col_name := prefix || col;

We take the prefix passed in and concatenate it ("||" in SQL) to the original column name. I like using the same prefix before each column because it makes it clearer which columns are the raw columns and which have the Z-score.

Now we do our actual SQL to create the new column in the same table:

EXECUTE FORMAT ('ALTER TABLE %I.%I ADD COLUMN %I NUMERIC', schema_name, table_name, new_col_name);

Normally PL/pgSQL will cache the query plan for a SQL statement. In our case, this statement is going to change each time we run through the loop, making our query dynamic. For dynamic queries you use EXECUTE to tell the PostgreSQL query planner not to cache the query.

To make sure we do SQL safe variable substitution, we use the FORMAT command. The %I in the SQL statement indicates we need this value to be treated like a SQL identifier (i.e. put " around a capitalized table name or escape an ' in a string value). This is the same behavior as the quote_ident command.

Then, by default, the parameters are substituted into the string in the order they appear at the end of the FORMAT command. So if:

- schema_name = final

- table_name = analysis

- new_column_name = z_precip

Then the following statement will be executed:

ALTER TABLE final.analysis ADD COLUMN z_precip NUMERIC

In the specific case of our example we can get away with making every column NUMERIC because Z-scores are always a decimal number regardless of the number type of the input. And with that, we have created our new column in the table.

Populating the Data Values using PL/pgSQL

We have a new column but there is no data in it. Let’s solve that now. Here is the statement I used to calculate the Z-score for the new column (formatted for readability):

EXECUTE FORMAT(

'WITH summary AS ( SELECT AVG(%1$I) AS avg, STDDEV(%1$I) AS stddev FROM %3$I.%4$I), ' ||

'final_select AS (SELECT %5$I, (%1$I - avg)/stddev AS centered FROM %3$I.%4$I CROSS JOIN summary) ' ||

UPDATE %3$I.%4$I SET %2$I = final_select.centered FROM final_select WHERE final_select.%5$I = %3$I.%4$I.%5$I ', col, new_col_name, schema_name, table_name, pkey);

Again we use the EXECUTE FORMAT so the planner doesn’t cache the query and we pass in new variables with each iteration of the loop. The actual SQL has two CTEs to calculate the Z-score and then an UPDATE statement to insert the Z-score into the right table and column.

Because we are reusing parameters in the SQL, we have to use a slightly different syntax for the variable substitution (highlighted in orange). Now we have variables like %1$I rather than just %I. This new notation means take the first parameter from all the parameters passed in to FORMAT. If we had written the query the previous way, we would have passed in the same parameters multiple times in the function call. This numbered reference to parameter format is much cleaner.

The first CTE (summary) calculates the average and standard deviation for the current column in the loop. We take those values and use them in the second CTE (final_select). By using the CROSS JOIN in this query we can use the avg and stddev in the Z-score calculation for each row in the data set. The final query is just updating the new column table with the Z-score where pkey in the final_select matches the pkey of the table.

Closing Out a PL/pgSQL Function

The creation of a new column and populating the values continues for each item in the iteration loop. We terminate the loop with the END LOOP statement. We then return ‘done’ to the SQL call that used the function. Again, I like returning this string because it allows the function user to know if the function actually finished, or it is taking a long time. You could change this to return NULL if you want.

Wrap Up

There you have it, my cool little function. I know it may have looked complicated at first glance, but now that we are finished you can see there are only 2 SQL lines to carry out all our work. Think of how much copy and pasting this saved!

The other big benefit is now I can reuse this function in any database in the future. I can control access to it by using schema permissions. As a matter of fact, I could add this function as part of a template. Then every database created in our hypothetical data science PostgreSQL cluster would have access to this function. I could give data scientists with permission to make this call and quickly calculate Z-scores without even having to know all the PL/pgSQL.

Finally this example could easily be modified to create a new table with new columns for the Z-scores. It could also be quickly modified to use an auxiliary table where the average and standard deviation for each predictive variable is stored. This was one of the possible solutions I explained in the previous post that would probably help overall performance.

I hope you learned something new in this post. Maybe you have already done some PL/pgSQL to alter tables. What kind of use cases did you have? What are something of the interesting twists you uncovered. I would love to hear your thoughts, please sent them to the Crunchy Data twitter account. Happy function writing!